Invariance learning with neural maps

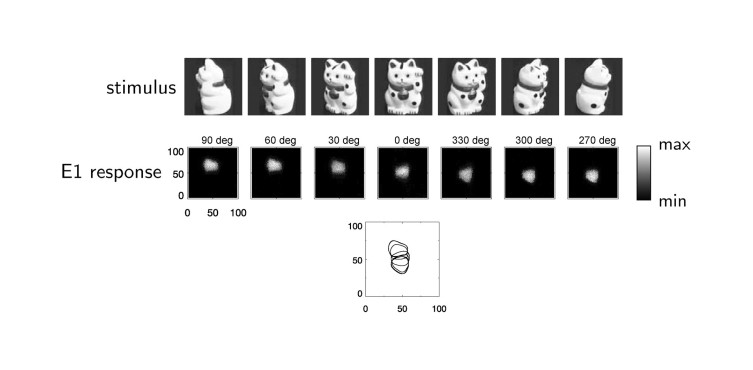

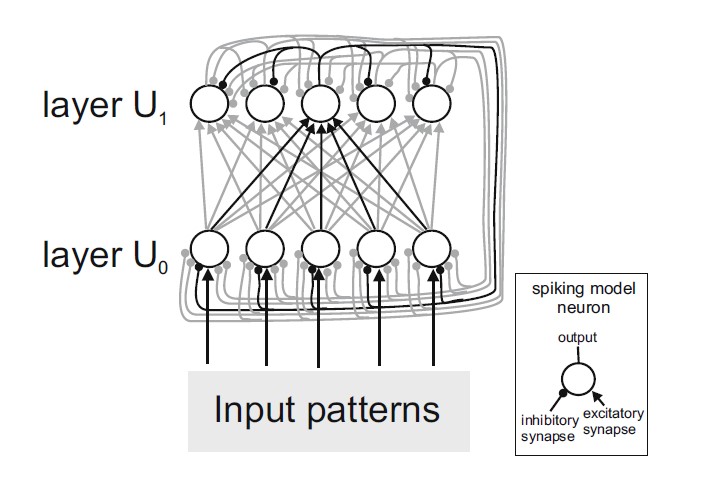

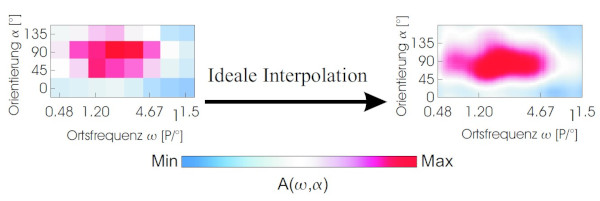

Humans can recognize visual objects quickly and reliably, regardless of the viewing angle or distance from which they are observed. For computers, however, this task long remained a challenge. Pattern-matching algorithms can be used to recognize images, but when an object is viewed from a different angle or distance, it produces a completely different light pattern on the retina or on a camera chip (“pixels”). The ability to recognize objects robustly despite variations in viewing angle, distance, or lighting conditions is called “invariant object recognition.”
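To see why plain pixel matching breaks down, consider a minimal sketch in pure Python. It places the same small L-shaped object at two positions in an 8×8 binary image and compares the two images pixel by pixel using cosine similarity. The helper names (`make_image`, `pixel_similarity`, `shift_invariant_similarity`, `L_SHAPE`) are hypothetical and chosen for illustration only. The "invariant" comparison here is a brute-force search over all cyclic shifts. It is a simple stand-in for illustration, not the neural-map approach this article is about, and it only handles translation, not changes in angle, scale, or lighting.

```python
import math

# Hypothetical example object: a small binary "L" shape (rows of pixels).
L_SHAPE = [[1, 0, 0],
           [1, 0, 0],
           [1, 1, 1]]

def make_image(size, top, left, obj):
    """Place a binary object into a blank size x size image at (top, left)."""
    img = [[0] * size for _ in range(size)]
    for r, row in enumerate(obj):
        for c, v in enumerate(row):
            img[top + r][left + c] = v
    return img

def pixel_similarity(a, b):
    """Cosine similarity of two images compared pixel by pixel (naive matching)."""
    fa = [v for row in a for v in row]
    fb = [v for row in b for v in row]
    dot = sum(x * y for x, y in zip(fa, fb))
    norm2 = sum(x * x for x in fa) * sum(y * y for y in fb)
    return dot / math.sqrt(norm2) if norm2 else 0.0

def shift(img, dy, dx):
    """Cyclically shift an image by dy rows and dx columns (with wraparound)."""
    n = len(img)
    return [[img[(r - dy) % n][(c - dx) % n] for c in range(n)] for r in range(n)]

def shift_invariant_similarity(a, b):
    """Best pixel similarity over every cyclic shift of b (translation only)."""
    n = len(a)
    return max(pixel_similarity(a, shift(b, dy, dx))
               for dy in range(n) for dx in range(n))

template = make_image(8, 1, 1, L_SHAPE)  # object near the top-left corner
moved = make_image(8, 4, 4, L_SHAPE)     # same object, moved 3 pixels down and right

print(round(pixel_similarity(template, moved), 3))            # 0.0: no pixels overlap
print(round(shift_invariant_similarity(template, moved), 3))  # 1.0: an aligning shift exists
```

Even though both images show the identical object, the naive pixel comparison scores them as completely unrelated. Searching over shifts recovers the match, but the cost grows with every additional kind of variation (rotation, scale, lighting) one tries to cover this way, which is one reason learned invariant representations are attractive.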