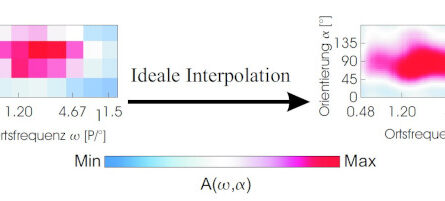

Simple neural network models for unsupervised pattern learning struggle when the differences between the patterns in the training set are small. As part of my doctoral thesis, I investigated how this can be improved by adding adaptive inhibitory feedback connections (Michler, F., Wachtler, T., Eckhorn, R., 2006).

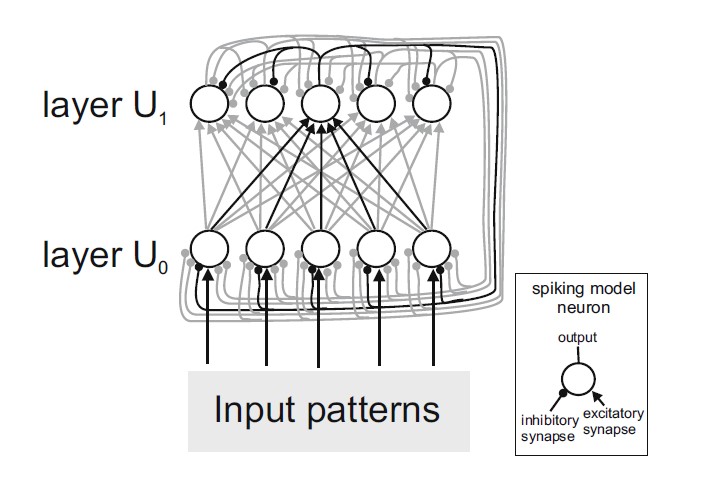

A biologically inspired architecture for pattern recognition networks has the following two important components:

- excitatory feedforward connections that are adapted by a learning rule (e.g. Hebbian learning, STDP),

- and inhibitory lateral connections, which provide competition between the output neurons.
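The two components above can be illustrated with a minimal sketch. It assumes a rate-based simplification (not the spiking neuron models used in the thesis), a plain Hebbian rule with weight normalization, and fixed lateral inhibition; the function names and parameter values are hypothetical and chosen only for illustration. It does not include the adaptive inhibitory feedback connections.

```python
import random

def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def normalize(w):
    # Keep each weight vector at unit length so Hebbian growth stays bounded.
    norm = sum(wi * wi for wi in w) ** 0.5
    return [wi / norm for wi in w]

def lateral_inhibition(drive, strength=0.5, steps=20):
    # Each output neuron is suppressed by the summed activity of the others.
    # Assumption: strength * (number of outputs - 1) < 1, so that this
    # simple fixed-point iteration settles.
    act = list(drive)
    for _ in range(steps):
        total = sum(act)
        act = [max(0.0, d - strength * (total - a)) for d, a in zip(drive, act)]
    return act

def train(patterns, n_out=2, lr=0.1, epochs=50, seed=0):
    rng = random.Random(seed)
    n_in = len(patterns[0])
    weights = [normalize([rng.random() for _ in range(n_in)]) for _ in range(n_out)]
    for _ in range(epochs):
        for x in patterns:
            # Excitatory feedforward drive of each output neuron.
            drive = [dot(w, x) for w in weights]
            # Competition between output neurons via lateral inhibition.
            act = lateral_inhibition(drive)
            # Hebbian update: weight change proportional to pre * post activity.
            for j, a in enumerate(act):
                weights[j] = normalize(
                    [wi + lr * a * xi for wi, xi in zip(weights[j], x)]
                )
    return weights

if __name__ == "__main__":
    # Two strongly overlapping input patterns (hypothetical toy data).
    similar_patterns = [[1.0, 0.9, 0.0], [0.9, 1.0, 0.0]]
    print(train(similar_patterns))
```

In this sketch the lateral inhibition only sharpens the contrast between the output activities. When the input patterns overlap strongly, the feedforward drives of the output neurons remain similar, which is the situation the text describes as challenging.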

In this work, I investigated how additional adaptive inhibitory feedback connections can improve the network's ability to discriminate even very similar patterns.

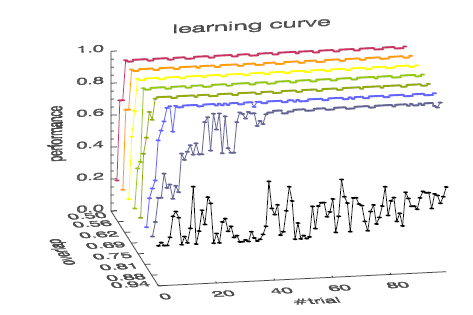

I was able to show that these additional connections indeed improve both the learning speed and the discrimination ability of the network.

In this work, I used spiking neuron models. This raises the question of whether (and how) adaptive inhibitory feedback can be utilized in neural network models with rate-based model neurons. Such model neurons are used, for example, in “convolutional neural networks” (CNNs) and in the currently popular transformer networks (large language models, “LLMs”).